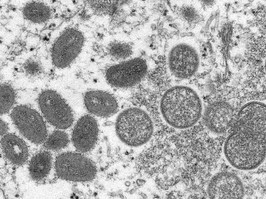

that means people will get served the content that’s interesting to them, which can be a great thing if someone who likes baking gets served videos about cookie recipes. but it’s a lot less constructive if someone who’s already vaccine-hesitant gets served a video about the made-up dangers of the monkeypox vaccine.

“the studies have shown that those algorithms do push information,” caulfield says. “that’s the reason that a video may go viral, even if it didn’t come from a really popular account, or from a celebrity or a sports star. it really is about these algorithms deciding what to see.”

but misinformation on social media, exacerbated by these kinds of algorithms, is a problem that extends beyond tiktok. nearly half of the accounts tweeting about covid are likely bots, according to researchers at carnegie mellon university. earlier this year, a study found that instagram regularly suggested content to users that featured lies about covid, and during the 2020 u.s. election, misinformation shared on facebook received six times as much traffic as legitimate news, the washington post reported.

but as bleak as the misinformation problem is, there are positives to this research too, caulfield says. acknowledging how powerful social media is, and monitoring health misinformation on emerging platforms like tiktok, can help experts figure out how to respond.

4 minute read